The Ultimate Workstation for Open Source AI

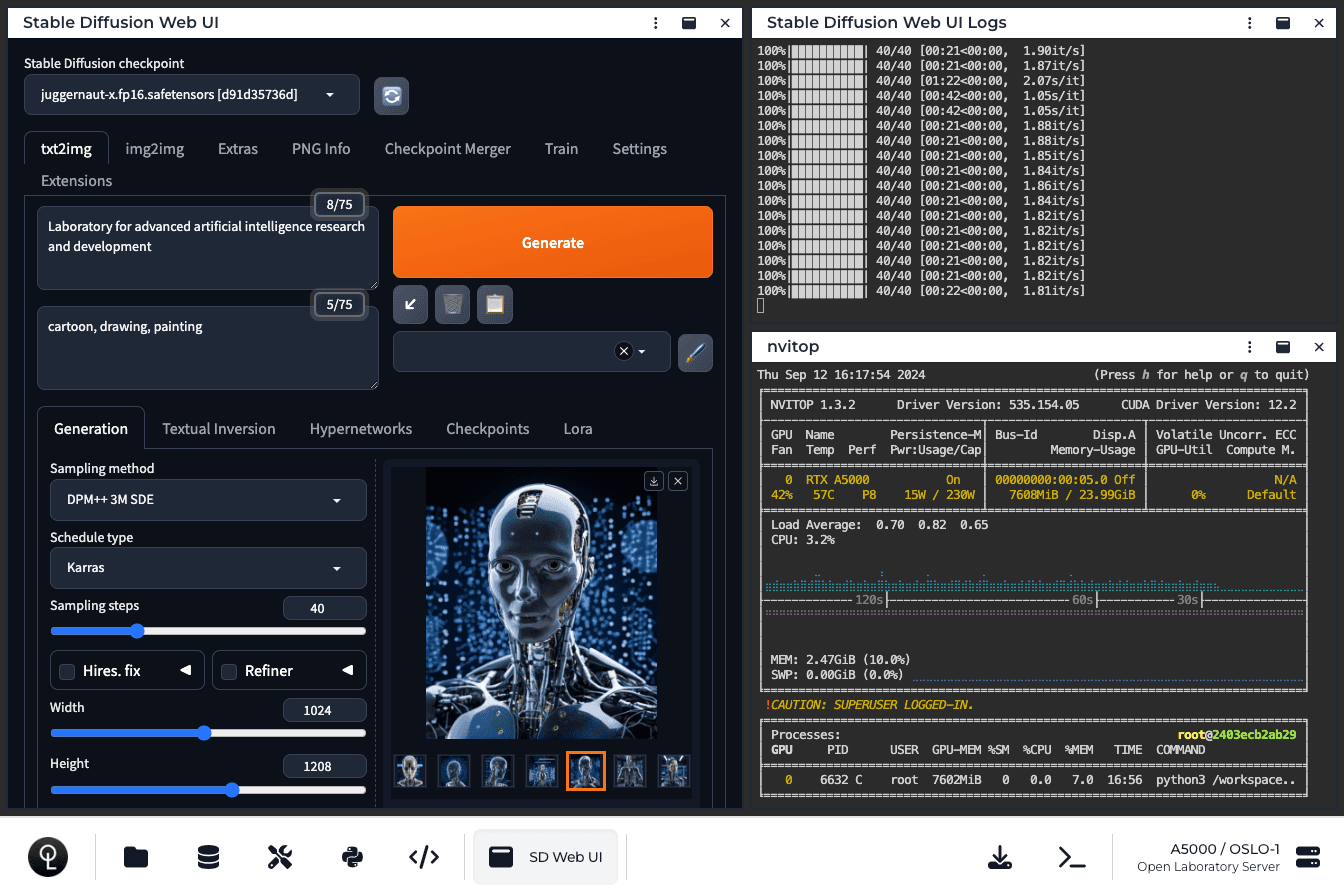

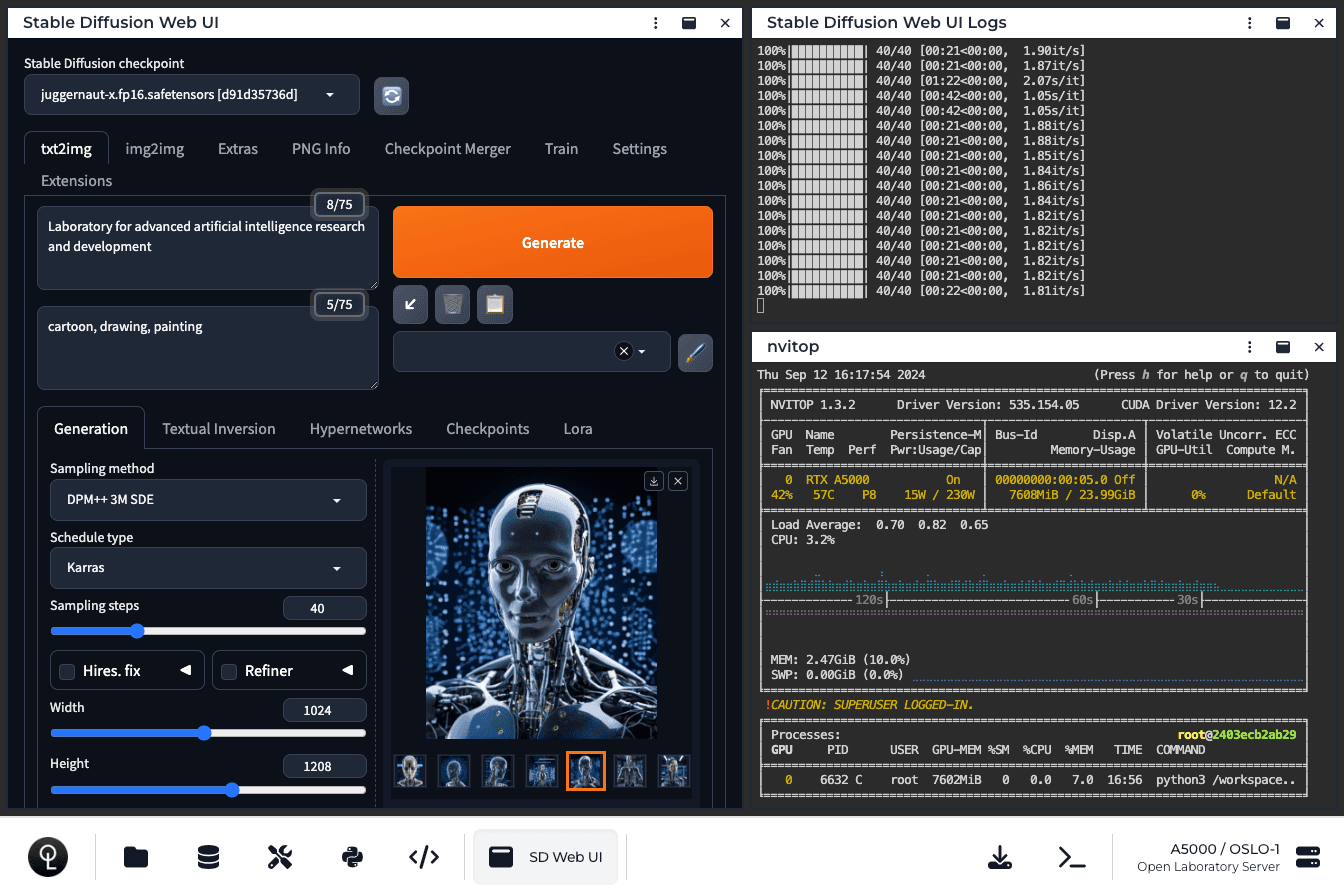

Stable Diffusion Web UI

App Library

Install AI research tools, APIs, and applications with one click.

Generate images and videos using a powerful low-level workflow graph builder - the fastest, most flexible, and most advanced visual generation UI.

Forge is a platform built on top of Stable Diffusion WebUI to make development easier, optimize resource management, speed up inference, and study experimental features.

Simple, intuitive, and powerful image generation. Easily inpaint, outpaint, and upscale. Influence the generation using image prompts.

Train your own LoRAs and finetunes for Stable Diffusion and Flux using this popular GUI for the Kohya trainers.

Open WebUI is an open-source, self-hosted web interface with a polished, ChatGPT-like user experience for interacting with LLMs. It integrates seamlessly with a local Ollama installation.

Automatic1111's legendary web UI for Stable Diffusion, the most comprehensive and full-featured AI image generation application in existence.

The most full-featured web interface for experimenting with open source Large Language Models, featuring a wide range of configurable settings, inference engines, and plugins.

Experiment with various cutting-edge audio generation models, such as Bark (Text-to-Speech), RVC (Voice Cloning), and MusicGen (Text-to-Music).

Open Source Moves Fast

Stay on the Cutting Edge

Explore the latest advances in AI research.

Run models and apps on a dedicated cloud GPU server.

Laboratory OS

The Linux Server for Open-Source AI

Cloud GPUs with the simplicity of a local workstation. Use the web desktop to install apps, download models, run advanced workflows, and deploy self-hosted APIs.

Model Library

Browse the latest open-weight models. Or bring your own.

Kimi K2 is an open-source mixture-of-experts language model developed by Moonshot AI, featuring 1 trillion total parameters with 32 billion activated per inference. The model utilizes a 128,000-token context window and specializes in agentic intelligence, tool use, and autonomous reasoning capabilities. Trained on 15.5 trillion tokens with reinforcement learning techniques, it demonstrates performance across coding, mathematical reasoning, and multi-step task execution benchmarks.

Gemma 3n E4B is a multimodal generative AI model developed by Google DeepMind with 8 billion raw parameters yielding 4 billion effective parameters. Built on the MatFormer architecture for mobile and edge deployment, it processes text, image, audio, and video inputs to generate text outputs. The model features elastic inference capabilities, allowing extraction of smaller sub-models for faster performance, and supports over 140 languages with demonstrated proficiency in reasoning, coding, and multilingual tasks.

DeepSeek R1 (0528) is a large language model developed by DeepSeek-AI featuring 671 billion total parameters with 37 billion activated during inference. Built on the DeepSeek-V3-Base architecture using Mixture-of-Experts design, it employs Group Relative Policy Optimization and multi-stage training with reinforcement learning to enhance reasoning capabilities. The model supports 128,000 token context length and demonstrates improved performance on mathematical, coding, and reasoning benchmarks compared to its predecessors.
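As a rough illustration of what "671 billion total parameters with 37 billion activated" means for a Mixture-of-Experts model, the sketch below computes the share of weights used during inference. The function name `active_fraction` is hypothetical and the two figures are taken from the description above; note that all weights still need to be loaded, so this ratio describes compute per token rather than memory.

```python
# Figures quoted in the description above, in billions of parameters.
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the share of parameters that are activated (0.0 to 1.0)."""
    if total_b <= 0:
        raise ValueError("total_b must be positive")
    return active_b / total_b


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Activated share: {fraction:.1%}")  # Activated share: 5.5%
```

The same calculation applies to the other Mixture-of-Experts entries in this library that list both a total and an active parameter count.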

Qwen3-0.6B is a dense language model with 0.6 billion parameters developed by Alibaba Cloud, featuring a 28-layer transformer architecture with Grouped Query Attention. The model supports dual thinking modes for adaptive reasoning and general dialogue, handles context lengths of up to 32,768 tokens, and demonstrates multilingual capabilities across over 100 languages. It utilizes strong-to-weak distillation from larger Qwen3 models and is released under the Apache 2.0 license.

Qwen3-4B is a 4.0 billion parameter transformer language model developed by Alibaba Cloud, featuring dual reasoning modes that allow users to toggle between detailed step-by-step thinking and rapid response generation. Released under Apache 2.0 license, the model supports 32,768 token contexts, demonstrates strong performance across mathematical reasoning and coding benchmarks, and incorporates advanced training techniques including strong-to-weak distillation from larger teacher models.

Qwen3-14B is a dense transformer language model developed by Alibaba Cloud with 14.8 billion parameters, featuring hybrid "thinking" and "non-thinking" reasoning modes that can be controlled via prompts. The model supports 119 languages, extends to 131k token contexts through YaRN scaling, and includes agent capabilities with tool-use functionality, all released under Apache 2.0 license.

Qwen3-30B-A3B is a Mixture-of-Experts language model developed by Alibaba Cloud featuring 30.5 billion total parameters with 3.3 billion activated per token. The model employs hybrid reasoning modes that allow dynamic switching between step-by-step thinking for complex tasks and rapid responses for simpler queries. It supports 119 languages, extends to a context length of 131,072 tokens, and utilizes strong-to-weak distillation from larger Qwen3 models for efficient deployment while maintaining competitive performance on reasoning, coding, and multilingual benchmarks.

HiDream I1 Full is an open-source image generation model developed by HiDream.ai featuring a 17 billion parameter sparse Diffusion Transformer architecture with Mixture-of-Experts design. The model employs hybrid text encoding combining Long-CLIP, T5-XXL, and Llama 3.1 8B components for precise text-to-image synthesis. It demonstrates strong performance on industry benchmarks and supports diverse visual styles through flow-matching in latent space.

Mistral Small 3.1 (2503) is a 24-billion parameter transformer-based model developed by Mistral AI and released under Apache 2.0 license. This multimodal and multilingual model processes both text and visual inputs with a context window of 128,000 tokens using the Tekken tokenizer. It demonstrates competitive performance on academic benchmarks including MMLU and GPQA while supporting function calling and structured output generation for automation workflows.

Gemma 3 4B is a multimodal instruction-tuned model developed by Google DeepMind that processes text and image inputs to generate text outputs. The model features a decoder-only transformer architecture with approximately 4.3 billion parameters, supports context windows up to 128,000 tokens, and operates across over 140 languages. It incorporates a SigLIP vision encoder for image processing and utilizes grouped-query attention with interleaved local and global attention layers for efficient long-context handling.

Gemma 3 27B is a multimodal generative AI model developed by Google DeepMind that processes both text and image inputs to produce text outputs. Built on a decoder-only transformer architecture with 27 billion parameters, it incorporates a SigLIP vision encoder and supports context lengths up to 128,000 tokens. The model was trained on over 14 trillion tokens and demonstrates competitive performance across language, coding, mathematical reasoning, and vision-language tasks.

QwQ 32B is a 32.5-billion parameter causal language model developed by Alibaba Cloud as part of the Qwen series. The model employs a transformer architecture with 64 layers and Grouped Query Attention, trained using supervised fine-tuning and reinforcement learning focused on mathematical reasoning and coding proficiency. Released under Apache 2.0 license, it demonstrates competitive performance on reasoning benchmarks despite its relatively compact size.

Wan 2.1 I2V 14B 480P is an image-to-video generation model developed by Wan-AI featuring 14 billion parameters and operating at 480P resolution. Built on a diffusion transformer architecture with T5-based text encoding and a 3D causal variational autoencoder, the model transforms static images into temporally coherent video sequences guided by textual prompts, supporting both Chinese and English text rendering within its generative capabilities.

Wan 2.1 T2V 14B is a 14-billion parameter video generation model developed by Wan-AI that creates videos from text descriptions or images. The model employs a spatio-temporal variational autoencoder and diffusion transformer architecture to generate content at 480P and 720P resolutions. It supports multiple languages including Chinese and English, handles various video generation tasks, and demonstrates computational efficiency across different hardware configurations when deployed for research applications.

Qwen2.5 VL 7B is a 7-billion parameter multimodal language model developed by Alibaba Cloud that processes text, images, and video inputs. The model features a Vision Transformer with dynamic resolution support and Multimodal Rotary Position Embedding for spatial-temporal understanding. It demonstrates capabilities in document analysis, OCR, object detection, video comprehension, and structured output generation across multiple languages, released under Apache-2.0 license.

Lumina Image 2.0 is a 2 billion parameter text-to-image generative model developed by Alpha-VLLM that utilizes a flow-based diffusion transformer architecture. The model generates high-fidelity images up to 1024x1024 pixels from textual descriptions, employs a Gemma-2-2B text encoder and FLUX-VAE-16CH variational autoencoder, and is released under the Apache-2.0 license with support for multiple inference solvers and fine-tuning capabilities.

MiniMax Text 01 is an open-source large language model developed by MiniMaxAI featuring 456 billion total parameters with 45.9 billion active per token. The model employs a hybrid attention mechanism combining Lightning Attention with periodic Softmax Attention layers across 80 transformer layers, utilizing a Mixture-of-Experts design with 32 experts and Top-2 routing. It supports context lengths up to 4 million tokens during inference and demonstrates competitive performance across text generation, reasoning, and coding benchmarks.

DeepSeek-VL2 is a series of Mixture-of-Experts vision-language models developed by DeepSeek-AI that integrates visual and textual understanding through a decoder-only architecture. The models utilize a SigLIP vision encoder with dynamic tiling for high-resolution image processing, coupled with DeepSeekMoE language components featuring Multi-head Latent Attention. Available in three variants with 1.0B, 2.8B, and 4.5B activated parameters, the models support multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding capabilities.

DeepSeek VL2 Tiny is a vision-language model from DeepSeek-AI that activates 1.0 billion parameters using a Mixture-of-Experts architecture. The model combines a SigLIP vision encoder with a DeepSeekMoE-based language component to handle multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding across images and text.

Llama 3.3 70B is a 70-billion parameter transformer-based language model developed by Meta, featuring instruction tuning through supervised fine-tuning and reinforcement learning from human feedback. The model supports a 128,000-token context window, incorporates Grouped-Query Attention for enhanced inference efficiency, and demonstrates multilingual capabilities across eight validated languages including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

CogVideoX 1.5 5B is an open-source video generation model developed by THUDM that creates high-resolution videos up to 1360x768 resolution from text prompts and images. The model employs a 3D causal variational autoencoder with 8x8x4 compression and an expert transformer architecture featuring adaptive LayerNorm for multimodal alignment. It supports both text-to-video and image-to-video synthesis with durations of 5-10 seconds at 16 fps, released under Apache 2.0 license.

QwQ 32B Preview is an experimental large language model developed by Alibaba Cloud's Qwen Team, built on the Qwen 2 architecture with 32.5 billion parameters. The model specializes in mathematical and coding reasoning tasks, achieving 65.2% on GPQA, 50.0% on AIME, 90.6% on MATH-500, and 50.0% on LiveCodeBench benchmarks through curiosity-driven, reflective analysis approaches.

Stable Diffusion 3.5 Large is an 8.1-billion-parameter text-to-image model utilizing Multimodal Diffusion Transformer architecture with Query-Key Normalization for enhanced training stability. The model generates images up to 1-megapixel resolution across diverse styles including photorealism, illustration, and digital art. It employs three text encoders supporting up to 256 tokens and demonstrates strong prompt adherence capabilities.

CogVideoX-5B-I2V is an open-source image-to-video generative AI model developed by THUDM that produces 6-second videos at 720×480 resolution from input images and English text prompts. The model employs a diffusion transformer architecture with 3D Causal VAE compression and generates 49 frames at 8 fps, supporting various video synthesis applications through its controllable conditioning mechanism.
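The "6-second" figure follows from the frame count and frame rate quoted above. A minimal sketch of that arithmetic, with a hypothetical helper name `clip_duration_seconds`:

```python
FRAMES = 49  # frames per clip, from the description above
FPS = 8      # playback rate, from the description above


def clip_duration_seconds(frames: int, fps: float) -> float:
    """Return playback time when every frame is shown for 1/fps seconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames / fps


duration_s = clip_duration_seconds(FRAMES, FPS)
print(f"{duration_s:.3f} s")  # 6.125 s
```

If the first frame is treated as time zero, the remaining 48 frame intervals span exactly 6 seconds, which matches the rounded figure in the description.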

Qwen 2.5 Math 7B is a 7.62-billion parameter language model developed by Alibaba Cloud that specializes in mathematical reasoning tasks in English and Chinese. The model employs chain-of-thought reasoning and tool-integrated approaches using Python interpreters for computational tasks. It demonstrates improved performance over its predecessor on mathematical benchmarks including MATH, GSM8K, and Chinese mathematics evaluations, achieving 83.6 on MATH using chain-of-thought methods.

Qwen2.5-Coder-7B is a 7.61 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, specialized for code generation and reasoning across 92 programming languages. The model features a 128,000-token context window, supports fill-in-the-middle code completion, and was trained on 5.5 trillion tokens of code and text data, demonstrating competitive performance on coding benchmarks like HumanEval and mathematical reasoning tasks.

Qwen 2.5 14B is a 14.7 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, featuring a 128,000 token context window and support for over 29 languages. The model utilizes advanced architectural components including Grouped Query Attention, RoPE embeddings, and SwiGLU activation, and was pretrained on up to 18 trillion tokens of diverse multilingual data for applications in reasoning, coding, and mathematical tasks.

Qwen 2.5 72B is a 72.7 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, released in September 2024. The model features a 128,000-token context window, supports over 29 languages, and demonstrates strong performance on coding, mathematical reasoning, and knowledge benchmarks. Built with architectural improvements including RoPE and SwiGLU activation functions, it excels at structured data handling and serves as a foundation model for fine-tuning applications.

Command R (08-2024) is a 32-billion parameter generative language model developed by Cohere, featuring a 128,000-token context window and support for 23 languages. The model incorporates Grouped Query Attention for enhanced inference efficiency and specializes in retrieval-augmented generation with citation capabilities, tool use, and multilingual comprehension. It demonstrates improved throughput and reduced latency compared to previous versions while offering configurable safety modes for enterprise applications.

Phi-3.5 Mini Instruct is a 3.8 billion parameter decoder-only Transformer model developed by Microsoft that supports multilingual text generation with a 128,000-token context window. The model demonstrates competitive performance across 22 languages and excels in reasoning, code generation, and long-context tasks, achieving an average benchmark score of 61.4 while maintaining efficient resource utilization.

AuraFlow v0.3 is a 6.8 billion parameter, flow-based text-to-image generative model developed by fal.ai. Built on an optimized DiT architecture with Maximal Update Parametrization, it features enhanced prompt following capabilities through comprehensive recaptioning and prompt enhancement pipelines. The model supports multiple aspect ratios and achieved a GenEval score of 0.703, demonstrating effective text-to-image synthesis across diverse artistic styles and photorealistic outputs.

Stable Fast 3D is a transformer-based generative AI model developed by Stability AI that reconstructs textured 3D mesh assets from single input images in approximately 0.5 seconds. The model predicts comprehensive material properties including albedo, roughness, and metallicity, producing UV-unwrapped meshes suitable for integration into rendering pipelines and interactive applications across gaming, virtual reality, and design workflows.

FLUX.1 [schnell] is a 12-billion parameter text-to-image generation model developed by Black Forest Labs using hybrid diffusion transformer architecture with rectified flow and latent adversarial diffusion distillation. The model generates images from text descriptions in 1-4 diffusion steps, supporting variable resolutions and aspect ratios. Released under Apache 2.0 license, it employs flow matching techniques and parallel attention layers for efficient synthesis.

Mistral Large 2 is a dense transformer-based language model developed by Mistral AI with 123 billion parameters and a 128,000-token context window. The model demonstrates strong performance across multilingual tasks, code generation in 80+ programming languages, mathematical reasoning, and function calling capabilities. It achieves 84% on MMLU, 92% on HumanEval, and 93% on GSM8K benchmarks while maintaining concise output generation.

Mistral NeMo 12B is a transformer-based language model developed collaboratively by Mistral AI and NVIDIA, featuring 12 billion parameters and a 128,000-token context window. The model incorporates grouped query attention, quantization-aware training for FP8 inference, and utilizes the custom Tekken tokenizer for improved multilingual and code compression efficiency. Available in both base and instruction-tuned variants, it demonstrates competitive performance on standard benchmarks while supporting function calling and multilingual capabilities across numerous languages including English, Chinese, Arabic, and various European languages.

Llama 3.1 70B is a transformer-based decoder language model developed by Meta with 70 billion parameters, trained on approximately 15 trillion tokens with a 128K context window. The model supports eight languages and demonstrates competitive performance across benchmarks for reasoning, coding, mathematics, and multilingual tasks. It is available under the Llama 3.1 Community License Agreement for research and commercial applications.

Gemma 2 9B is an open-weights decoder-only transformer language model developed by Google as part of the Gemma family. Trained on 8 trillion tokens using TPUv5p infrastructure, the model supports English text generation, question answering, and summarization tasks. Available in both pre-trained and instruction-tuned versions with bfloat16 precision, it demonstrates competitive performance on benchmarks like MMLU and coding evaluations while incorporating safety filtering mechanisms.

DeepSeek Coder V2 Lite is an open-source Mixture-of-Experts code language model featuring 16 billion total parameters with 2.4 billion active parameters during inference. The model supports 338 programming languages, processes up to 128,000 tokens of context, and demonstrates competitive performance on code generation benchmarks including 81.1% accuracy on Python HumanEval tasks.

Qwen2-72B is a 72.71 billion parameter Transformer-based language model developed by Alibaba Cloud, featuring Grouped Query Attention and SwiGLU activation functions. The model demonstrates strong performance across diverse benchmarks including MMLU (84.2), HumanEval (64.6), and GSM8K (89.5), with multilingual capabilities spanning 27 languages and extended context handling up to 128,000 tokens for specialized applications.

Yi 1.5 34B is a 34.4 billion parameter decoder-only Transformer language model developed by 01.AI, featuring Grouped-Query Attention and SwiGLU activations. Trained on 3.1 trillion bilingual tokens, it demonstrates capabilities in reasoning, mathematics, and code generation, with variants supporting up to 200,000 token contexts and multimodal understanding through vision-language extensions.

DeepSeek V2 is a large-scale Mixture-of-Experts language model with 236 billion total parameters, activating only 21 billion per token. It features Multi-head Latent Attention for reduced memory usage and supports context lengths up to 128,000 tokens. Trained on 8.1 trillion tokens with emphasis on English and Chinese data, it demonstrates competitive performance across language understanding, code generation, and mathematical reasoning tasks while achieving significant efficiency improvements over dense models.

Phi-3 Mini Instruct is a 3.8 billion parameter instruction-tuned language model developed by Microsoft using a dense decoder-only Transformer architecture. The model supports a 128,000 token context window and was trained on 4.9 trillion tokens of high-quality data, followed by supervised fine-tuning and direct preference optimization. It demonstrates competitive performance in reasoning, mathematics, and code generation tasks among models under 13 billion parameters, with particular strengths in long-context understanding and structured output generation.

Llama 3 8B is an open-weights transformer-based language model developed by Meta, featuring 8 billion parameters and trained on over 15 trillion tokens. The model utilizes grouped-query attention and a 128,000-token vocabulary, supporting 8,192-token context lengths. Available in both pretrained and instruction-tuned variants, it demonstrates capabilities in text generation, code completion, and conversational tasks across multiple languages.

Llama 4 Scout (17Bx16E) is a multimodal large language model developed by Meta using a Mixture-of-Experts transformer architecture with 109 billion total parameters and 17 billion active parameters per token. The model features a 10 million token context window, supports text and image understanding across multiple languages, and was trained on approximately 40 trillion tokens with an August 2024 knowledge cutoff.

Command R+ v01 is a 104-billion parameter open-weights language model developed by Cohere, optimized for retrieval-augmented generation, tool use, and multilingual tasks. The model features a 128,000-token context window and specializes in generating outputs with inline citations from retrieved documents. It supports automated tool calling, demonstrates competitive performance across standard benchmarks, and includes efficient tokenization for non-English languages, making it suitable for enterprise applications requiring factual accuracy and transparency.

Command R v01 is a 35-billion-parameter transformer-based language model developed by Cohere, featuring retrieval-augmented generation with explicit citations, tool use capabilities, and multilingual support across ten languages. The model supports a 128,000-token context window and demonstrates performance in enterprise applications, multi-step reasoning tasks, and long-context evaluations, though it requires commercial licensing for enterprise use.

Playground v2.5 Aesthetic is a diffusion-based text-to-image model that generates images at 1024x1024 resolution across multiple aspect ratios. Developed by Playground and released in February 2024, it employs the EDM training framework and human preference alignment techniques to improve color vibrancy, contrast, and human feature rendering compared to its predecessor and other open-source models like Stable Diffusion XL.

Stable Cascade Stage B is an intermediate latent super-resolution component within Stability AI's three-stage text-to-image generation system built on the Würstchen architecture. It operates as a diffusion model that upscales compressed 16×24×24 latents from Stage C to 4×256×256 representations, preserving semantic content while restoring fine details. Available in 700M and 1.5B parameter versions, Stage B enables the system's efficient 42:1 compression ratio and supports extensions like ControlNet and LoRA for enhanced creative workflows.
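The latent shapes quoted above can be related to the 42:1 figure with some quick arithmetic. The sketch below assumes a 1024-pixel image side, which is not stated in the description; the latent shapes are taken from the text.

```python
ASSUMED_IMAGE_SIDE_PX = 1024  # assumption: not stated in the description
STAGE_C_SHAPE = (16, 24, 24)  # channels, height, width (from the text)
STAGE_B_SHAPE = (4, 256, 256)  # channels, height, width (from the text)

# Per-side spatial compression from pixels down to the Stage C latent.
spatial_compression = ASSUMED_IMAGE_SIDE_PX / STAGE_C_SHAPE[1]

# Per-side upscaling that Stage B performs on the Stage C latent.
stage_b_upscale = STAGE_B_SHAPE[1] / STAGE_C_SHAPE[1]

print(f"pixels to Stage C: {spatial_compression:.2f}x per side")  # 42.67x
print(f"Stage C to Stage B: {stage_b_upscale:.2f}x per side")     # 10.67x
```

Under that assumption, the per-side ratio of roughly 42.67 is consistent with the 42:1 compression ratio mentioned in the description.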

Stable Video Diffusion XT 1.1 is a latent diffusion model developed by Stability AI that generates 25-frame video sequences at 1024x576 resolution from single input images. The model employs a three-stage training process including image pretraining, video training on curated datasets, and high-resolution finetuning, enabling motion synthesis with configurable camera controls and temporal consistency for image-to-video transformation applications.

Qwen 1.5 72B is a 72-billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team and released in February 2024. The model supports a 32,768-token context window and demonstrates strong multilingual capabilities across 12 languages, achieving competitive performance on benchmarks including MMLU (77.5), C-Eval (84.1), and GSM8K (79.5). It features alignment optimization through Direct Preference Optimization and Proximal Policy Optimization techniques, enabling effective instruction-following and integration with external systems for applications including retrieval-augmented generation and code interpretation.

The SDXL Motion Model is an AnimateDiff-based video generation framework that adds temporal animation capabilities to existing text-to-image diffusion models. Built for compatibility with SDXL at 1024×1024 resolution, it employs a plug-and-play motion module trained on video datasets to generate coherent animated sequences while preserving the visual style of the underlying image model.

Phi-2 is a 2.7 billion parameter Transformer-based language model developed by Microsoft Research and released in December 2023. The model was trained on approximately 1.4 trillion tokens using a "textbook-quality" data approach, incorporating synthetic data from GPT-3.5 and filtered web sources. Phi-2 demonstrates competitive performance in reasoning, language understanding, and code generation tasks compared to larger models in its parameter class.

Mixtral 8x7B is a sparse Mixture of Experts language model developed by Mistral AI and released under the Apache 2.0 license in December 2023. The model uses a decoder-only transformer architecture with eight expert networks per layer, activating only two experts per token, resulting in 12.9 billion active parameters from a total of 46.7 billion. It demonstrates competitive performance on benchmarks including MMLU, supports English, French, German, Spanish, and Italian, and maintains efficient inference speeds.
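To make "eight expert networks per layer, activating only two experts per token" more concrete, here is a minimal pure-Python sketch of top-2 routing. It is an illustration under stated assumptions, not Mistral AI's implementation: the function names, the toy experts, and the router scores are all made up, and real models apply this to tensors rather than single floats.

```python
import math


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    if not 0 < k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    peak = max(router_logits[i] for i in top)  # subtract for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x, router_logits, experts, k=2):
    """Combine the selected experts' outputs, weighted by the router."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Eight toy "experts": expert i multiplies its input by (i + 1).
experts = [lambda x, scale=i + 1: scale * x for i in range(8)]
router_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]  # made-up scores

selected = top_k_route(router_logits)
output = moe_layer(1.0, router_logits, experts)
print(selected)  # [(1, 0.5), (4, 0.5)]
print(output)    # 3.5
```

Only two of the eight experts run for this token, which is why the active parameter count is much smaller than the total.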

Playground v2 Aesthetic is a latent diffusion text-to-image model developed by playgroundai that generates 1024x1024 pixel images using dual pre-trained text encoders (OpenCLIP-ViT/G and CLIP-ViT/L). The model achieved a 7.07 FID score on the MJHQ-30K benchmark and demonstrated a 2.5x preference rate over Stable Diffusion XL in user studies, focusing on high-aesthetic image synthesis with strong prompt alignment.

Stable Video Diffusion XT is a generative AI model developed by Stability AI that extends the Stable Diffusion architecture for video synthesis. The model supports image-to-video and text-to-video generation, producing up to 25 frames at frame rates of 3-30 fps. Built on a latent video diffusion architecture with over 1.5 billion parameters, SVD-XT incorporates temporal modeling layers and was trained using a three-stage methodology on curated video datasets.

Yi 1 34B is a bilingual transformer-based language model developed by 01.AI, trained on 3 trillion tokens with support for both English and Chinese. The model features a 4,096-token context window and demonstrates competitive performance on multilingual benchmarks including MMLU, CMMLU, and C-Eval, with variants available including extended 200K context and chat-optimized versions released under Apache 2.0 license.

MusicGen is a text-to-music generation model developed by Meta's FAIR team as part of the AudioCraft library. The model uses a two-stage architecture combining EnCodec neural audio compression with a transformer-based autoregressive language model to generate musical audio from textual descriptions or melody inputs. Trained on approximately 20,000 hours of licensed music, MusicGen supports both monophonic and stereophonic outputs and demonstrates competitive performance in objective and subjective evaluations against contemporary music generation models.

Vocos is a neural vocoder developed by GemeloAI that employs a Fourier-based architecture to generate Short-Time Fourier Transform spectral coefficients rather than directly modeling time-domain waveforms. The model supports both mel-spectrogram and neural audio codec token inputs, operates under the MIT license, and demonstrates computational efficiency through its use of inverse STFT for audio reconstruction while achieving competitive performance metrics on speech and music synthesis tasks.

CodeLlama 34B is a large language model developed by Meta that builds upon Llama 2's architecture and is optimized for code generation, understanding, and programming tasks. The model supports multiple programming languages including Python, C++, Java, and JavaScript, with an extended context window of up to 100,000 tokens for handling large codebases. Available in three variants (Base, Python-specialized, and Instruct), it achieved 53.7% accuracy on HumanEval and 56.2% on MBPP benchmarks, demonstrating capabilities in code completion, debugging, and natural language explanations.

Llama 2 7B is a transformer-based language model developed by Meta with 7 billion parameters, trained on 2 trillion tokens with a 4,096-token context length. The model supports text generation in English and 27 other languages, with chat-optimized variants fine-tuned using supervised learning and reinforcement learning from human feedback for dialogue applications.

Llama 2 70B is a 70-billion parameter transformer-based language model developed by Meta, featuring Grouped-Query Attention and a 4,096-token context window. Trained on 2 trillion tokens with a September 2022 cutoff, it demonstrates strong performance across language benchmarks including 68.9 on MMLU and 37.5 pass@1 on code generation tasks, while offering both pretrained and chat-optimized variants under Meta's commercial license.
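
To make the effect of Grouped-Query Attention concrete, the sketch below maps query heads onto shared key/value heads and compares key/value cache sizes. The layer count, head counts, and head dimension are assumptions chosen for illustration (they are not stated in the description above), and the helper names are hypothetical. Only the 4,096-token context length is taken from the text.

```python
def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Return the key/value head shared by a given query head."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the key/value cache for one sequence (factor 2 = keys + values)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

# Assumed configuration for illustration; 16-bit values, one 4,096-token sequence.
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM, SEQ_LEN = 80, 64, 8, 128, 4096

full_mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
grouped = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(kv_head_for(63, N_QUERY_HEADS, N_KV_HEADS))   # last query head's KV group
print(full_mha / 2**30, grouped / 2**30)            # cache sizes in GiB
```

Under these assumptions, sharing each key/value head across eight query heads shrinks the cache by the same factor of eight, which is the main inference benefit of the technique.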

Bark is a transformer-based text-to-audio model that generates multilingual speech, music, and sound effects by converting text directly to audio tokens using EnCodec quantization. The model supports over 13 languages with 100+ speaker presets and can produce nonverbal sounds like laughter through special tokens, operating via a three-stage pipeline from semantic to fine audio tokens.

LLaMA 13B is a transformer-based language model developed by Meta as part of the LLaMA model family, featuring 13 billion parameters and trained on 1.4 trillion tokens from publicly available datasets. The model incorporates architectural optimizations including RMSNorm, SwiGLU activation functions, and rotary positional embeddings, achieving competitive performance with larger models while maintaining efficiency. Released under a noncommercial research license, it demonstrates capabilities across language understanding, reasoning, and code generation benchmarks.

LLaMA 65B is a 65.2 billion parameter transformer-based language model developed by Meta and released in February 2023. The model utilizes architectural optimizations including RMSNorm pre-normalization, SwiGLU activation functions, and rotary positional embeddings. Trained exclusively on 1.4 trillion tokens from publicly available datasets including CommonCrawl, Wikipedia, GitHub, and arXiv, it demonstrates competitive performance across natural language understanding benchmarks while being distributed under a non-commercial research license.
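
Two of the components named above, RMSNorm and the SwiGLU activation, are compact enough to sketch in plain Python. This is an illustrative sketch rather than Meta's code: the function names and the `eps` default are assumptions, and `swiglu` takes the two linear projections of the input as already-computed lists instead of performing the matrix multiplications itself.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Scale x by the reciprocal of its root mean square, then apply a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]

def silu(v):
    """SiLU (Swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def swiglu(gate_proj, value_proj):
    """SwiGLU gating: SiLU of one projection multiplied elementwise by the other."""
    return [silu(g) * v for g, v in zip(gate_proj, value_proj)]

normed = rms_norm([3.0, 4.0], gain=[1.0, 1.0])
gated = swiglu([0.0, 10.0], [5.0, 2.0])
print(normed, gated)
```

Unlike LayerNorm, RMSNorm does not subtract the mean, so the normalised vector has a mean square of about 1 but is not re-centred.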

Stable Diffusion 2 is an open-source text-to-image diffusion model developed by Stability AI that generates images at resolutions up to 768×768 pixels using latent diffusion techniques. The model employs an OpenCLIP-ViT/H text encoder and was trained on filtered subsets of the LAION-5B dataset. It includes specialized variants for inpainting, depth-conditioned generation, and 4x upscaling, offering improved capabilities over earlier versions while maintaining open accessibility for research applications.

Stable Diffusion 1.5 is a latent text-to-image diffusion model that generates 512x512 images from text prompts using a U-Net architecture conditioned on CLIP text embeddings within a compressed latent space. Trained on LAION dataset subsets, the model supports text-to-image generation, image-to-image translation, and inpainting tasks, released under the CreativeML OpenRAIL-M license for research and commercial applications.

Stable Diffusion 1.1 is a latent text-to-image diffusion model developed by CompVis, Stability AI, and Runway that generates images from natural language prompts. The model uses a VAE to compress images into latent space, a U-Net for denoising, and a CLIP text encoder for conditioning. Trained on LAION dataset subsets at 512×512 resolution, it supports text-to-image generation, image-to-image translation, and inpainting applications while operating efficiently in compressed latent space.

Kimi K2 is an open-source mixture-of-experts language model developed by Moonshot AI, featuring 1 trillion total parameters with 32 billion activated per inference. The model utilizes a 128,000-token context window and specializes in agentic intelligence, tool use, and autonomous reasoning capabilities. Trained on 15.5 trillion tokens with reinforcement learning techniques, it is evaluated on coding, mathematical reasoning, and multi-step task execution benchmarks.

Gemma 3n E4B is a multimodal generative AI model developed by Google DeepMind with 8 billion raw parameters yielding 4 billion effective parameters. Built on the MatFormer architecture for mobile and edge deployment, it processes text, image, audio, and video inputs to generate text outputs. The model features elastic inference capabilities, allowing extraction of smaller sub-models for faster performance, and supports over 140 languages with demonstrated proficiency in reasoning, coding, and multilingual tasks.

DeepSeek R1 (0528) is a large language model developed by DeepSeek-AI featuring 671 billion total parameters with 37 billion activated during inference. Built on the DeepSeek-V3-Base architecture using Mixture-of-Experts design, it employs Group Relative Policy Optimization and multi-stage training with reinforcement learning to enhance reasoning capabilities. The model supports 128,000 token context length and demonstrates improved performance on mathematical, coding, and reasoning benchmarks compared to its predecessors.

Qwen3-0.6B is a dense language model with 0.6 billion parameters developed by Alibaba Cloud, featuring a 28-layer transformer architecture with Grouped Query Attention. The model supports dual thinking modes for adaptive reasoning and general dialogue, processes up to 32,768 tokens context length, and demonstrates multilingual capabilities across over 100 languages. It utilizes strong-to-weak distillation from larger Qwen3 models and is released under Apache 2.0 license.

Qwen3-4B is a 4.0 billion parameter transformer language model developed by Alibaba Cloud, featuring dual reasoning modes that allow users to toggle between detailed step-by-step thinking and rapid response generation. Released under Apache 2.0 license, the model supports 32,768 token contexts, demonstrates strong performance across mathematical reasoning and coding benchmarks, and incorporates advanced training techniques including strong-to-weak distillation from larger teacher models.

Qwen3-14B is a dense transformer language model developed by Alibaba Cloud with 14.8 billion parameters, featuring hybrid "thinking" and "non-thinking" reasoning modes that can be controlled via prompts. The model supports 119 languages, extends to 131k token contexts through YaRN scaling, and includes agent capabilities with tool-use functionality, all released under Apache 2.0 license.

Qwen3-30B-A3B is a Mixture-of-Experts language model developed by Alibaba Cloud featuring 30.5 billion total parameters with 3.3 billion activated per token. The model employs hybrid reasoning modes that allow dynamic switching between step-by-step thinking for complex tasks and rapid responses for simpler queries. It supports 119 languages, extends to 131,072 tokens context length, and utilizes strong-to-weak distillation from larger Qwen3 models for efficient deployment while maintaining competitive performance on reasoning, coding, and multilingual benchmarks.

HiDream I1 Full is an open-source image generation model developed by HiDream.ai featuring a 17 billion parameter sparse Diffusion Transformer architecture with Mixture-of-Experts design. The model employs hybrid text encoding combining Long-CLIP, T5-XXL, and Llama 3.1 8B components for precise text-to-image synthesis. It demonstrates strong performance on industry benchmarks and supports diverse visual styles through flow-matching in latent space.

Mistral Small 3.1 (2503) is a 24-billion parameter transformer-based model developed by Mistral AI and released under Apache 2.0 license. This multimodal and multilingual model processes both text and visual inputs with a context window of 128,000 tokens using the Tekken tokenizer. It demonstrates competitive performance on academic benchmarks including MMLU and GPQA while supporting function calling and structured output generation for automation workflows.

Gemma 3 4B is a multimodal instruction-tuned model developed by Google DeepMind that processes text and image inputs to generate text outputs. The model features a decoder-only transformer architecture with approximately 4.3 billion parameters, supports context windows up to 128,000 tokens, and operates across over 140 languages. It incorporates a SigLIP vision encoder for image processing and utilizes grouped-query attention with interleaved local and global attention layers for efficient long-context handling.

Gemma 3 27B is a multimodal generative AI model developed by Google DeepMind that processes both text and image inputs to produce text outputs. Built on a decoder-only transformer architecture with 27 billion parameters, it incorporates a SigLIP vision encoder and supports context lengths up to 128,000 tokens. The model was trained on over 14 trillion tokens and demonstrates competitive performance across language, coding, mathematical reasoning, and vision-language tasks.

QwQ 32B is a 32.5-billion parameter causal language model developed by Alibaba Cloud as part of the Qwen series. The model employs a transformer architecture with 64 layers and Grouped Query Attention, trained using supervised fine-tuning and reinforcement learning focused on mathematical reasoning and coding proficiency. Released under Apache 2.0 license, it demonstrates competitive performance on reasoning benchmarks despite its relatively compact size.

Wan 2.1 I2V 14B 480P is an image-to-video generation model developed by Wan-AI featuring 14 billion parameters and operating at 480P resolution. Built on a diffusion transformer architecture with T5-based text encoding and a 3D causal variational autoencoder, the model transforms static images into temporally coherent video sequences guided by textual prompts, supporting both Chinese and English text rendering within its generative capabilities.

Wan 2.1 T2V 14B is a 14-billion parameter video generation model developed by Wan-AI that creates videos from text descriptions or images. The model employs a spatio-temporal variational autoencoder and diffusion transformer architecture to generate content at 480P and 720P resolutions. It supports multiple languages including Chinese and English, handles various video generation tasks, and demonstrates computational efficiency across different hardware configurations when deployed for research applications.

Qwen2.5 VL 7B is a 7-billion parameter multimodal language model developed by Alibaba Cloud that processes text, images, and video inputs. The model features a Vision Transformer with dynamic resolution support and Multimodal Rotary Position Embedding for spatial-temporal understanding. It demonstrates capabilities in document analysis, OCR, object detection, video comprehension, and structured output generation across multiple languages, released under Apache-2.0 license.

Lumina Image 2.0 is a 2 billion parameter text-to-image generative model developed by Alpha-VLLM that utilizes a flow-based diffusion transformer architecture. The model generates high-fidelity images up to 1024x1024 pixels from textual descriptions, employs a Gemma-2-2B text encoder and FLUX-VAE-16CH variational autoencoder, and is released under the Apache-2.0 license with support for multiple inference solvers and fine-tuning capabilities.

MiniMax Text 01 is an open-source large language model developed by MiniMaxAI featuring 456 billion total parameters with 45.9 billion active per token. The model employs a hybrid attention mechanism combining Lightning Attention with periodic Softmax Attention layers across 80 transformer layers, utilizing a Mixture-of-Experts design with 32 experts and Top-2 routing. It supports context lengths up to 4 million tokens during inference and demonstrates competitive performance across text generation, reasoning, and coding benchmarks.

DeepSeek-VL2 is a series of Mixture-of-Experts vision-language models developed by DeepSeek-AI that integrates visual and textual understanding through a decoder-only architecture. The models utilize a SigLIP vision encoder with dynamic tiling for high-resolution image processing, coupled with DeepSeekMoE language components featuring Multi-head Latent Attention. Available in three variants with 1.0B, 2.8B, and 4.5B activated parameters, the models support multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding capabilities.

DeepSeek VL2 Tiny is a vision-language model from DeepSeek-AI that activates 1.0 billion parameters using a Mixture-of-Experts architecture. The model combines a SigLIP vision encoder with a DeepSeekMoE-based language component to handle multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding across images and text.

Llama 3.3 70B is a 70-billion parameter transformer-based language model developed by Meta, featuring instruction tuning through supervised fine-tuning and reinforcement learning from human feedback. The model supports a 128,000-token context window, incorporates Grouped-Query Attention for enhanced inference efficiency, and demonstrates multilingual capabilities across eight validated languages including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

CogVideoX 1.5 5B is an open-source video generation model developed by THUDM that creates high-resolution videos up to 1360x768 resolution from text prompts and images. The model employs a 3D causal variational autoencoder with 8x8x4 compression and an expert transformer architecture featuring adaptive LayerNorm for multimodal alignment. It supports both text-to-video and image-to-video synthesis with durations of 5-10 seconds at 16 fps, released under Apache 2.0 license.

QwQ 32B Preview is an experimental large language model developed by Alibaba Cloud's Qwen Team, built on the Qwen 2 architecture with 32.5 billion parameters. The model specializes in mathematical and coding reasoning tasks, achieving 65.2% on GPQA, 50.0% on AIME, 90.6% on MATH-500, and 50.0% on LiveCodeBench benchmarks through curiosity-driven, reflective analysis approaches.

Stable Diffusion 3.5 Large is an 8.1-billion-parameter text-to-image model utilizing Multimodal Diffusion Transformer architecture with Query-Key Normalization for enhanced training stability. The model generates images up to 1-megapixel resolution across diverse styles including photorealism, illustration, and digital art. It employs three text encoders supporting up to 256 tokens and demonstrates strong prompt adherence capabilities.

CogVideoX-5B-I2V is an open-source image-to-video generative AI model developed by THUDM that produces 6-second videos at 720×480 resolution from input images and English text prompts. The model employs a diffusion transformer architecture with 3D Causal VAE compression and generates 49 frames at 8 fps, supporting various video synthesis applications through its controllable conditioning mechanism.

Qwen 2.5 Math 7B is a 7.62-billion parameter language model developed by Alibaba Cloud that specializes in mathematical reasoning tasks in English and Chinese. The model employs chain-of-thought reasoning and tool-integrated approaches using Python interpreters for computational tasks. It demonstrates improved performance over its predecessor on mathematical benchmarks including MATH, GSM8K, and Chinese mathematics evaluations, achieving 83.6 on MATH using chain-of-thought methods.

Qwen2.5-Coder-7B is a 7.61 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, specialized for code generation and reasoning across 92 programming languages. The model features a 128,000-token context window, supports fill-in-the-middle code completion, and was trained on 5.5 trillion tokens of code and text data, demonstrating competitive performance on coding benchmarks like HumanEval and mathematical reasoning tasks.

Qwen 2.5 14B is a 14.7 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, featuring a 128,000 token context window and support for over 29 languages. The model utilizes advanced architectural components including Grouped Query Attention, RoPE embeddings, and SwiGLU activation, and was pretrained on up to 18 trillion tokens of diverse multilingual data for applications in reasoning, coding, and mathematical tasks.

Qwen 2.5 72B is a 72.7 billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team, released in September 2024. The model features a 128,000-token context window, supports over 29 languages, and demonstrates strong performance on coding, mathematical reasoning, and knowledge benchmarks. Built with architectural improvements including RoPE and SwiGLU activation functions, it excels at structured data handling and serves as a foundation model for fine-tuning applications.

Command R (08-2024) is a 32-billion parameter generative language model developed by Cohere, featuring a 128,000-token context window and support for 23 languages. The model incorporates Grouped Query Attention for enhanced inference efficiency and specializes in retrieval-augmented generation with citation capabilities, tool use, and multilingual comprehension. It demonstrates improved throughput and reduced latency compared to previous versions while offering configurable safety modes for enterprise applications.

Phi-3.5 Mini Instruct is a 3.8 billion parameter decoder-only Transformer model developed by Microsoft that supports multilingual text generation with a 128,000-token context window. The model demonstrates competitive performance across 22 languages and excels in reasoning, code generation, and long-context tasks, achieving an average benchmark score of 61.4 while maintaining efficient resource utilization.

AuraFlow v0.3 is a 6.8 billion parameter, flow-based text-to-image generative model developed by fal.ai. Built on an optimized DiT architecture with Maximal Update Parametrization, it features enhanced prompt following capabilities through comprehensive recaptioning and prompt enhancement pipelines. The model supports multiple aspect ratios and achieved a GenEval score of 0.703, demonstrating effective text-to-image synthesis across diverse artistic styles and photorealistic outputs.

Stable Fast 3D is a transformer-based generative AI model developed by Stability AI that reconstructs textured 3D mesh assets from single input images in approximately 0.5 seconds. The model predicts comprehensive material properties including albedo, roughness, and metallicity, producing UV-unwrapped meshes suitable for integration into rendering pipelines and interactive applications across gaming, virtual reality, and design workflows.

FLUX.1 [schnell] is a 12-billion parameter text-to-image generation model developed by Black Forest Labs using hybrid diffusion transformer architecture with rectified flow and latent adversarial diffusion distillation. The model generates images from text descriptions in 1-4 diffusion steps, supporting variable resolutions and aspect ratios. Released under Apache 2.0 license, it employs flow matching techniques and parallel attention layers for efficient synthesis.

Mistral Large 2 is a dense transformer-based language model developed by Mistral AI with 123 billion parameters and a 128,000-token context window. The model demonstrates strong performance across multilingual tasks, code generation in 80+ programming languages, mathematical reasoning, and function calling capabilities. It achieves 84% on MMLU, 92% on HumanEval, and 93% on GSM8K benchmarks while maintaining concise output generation.

Mistral NeMo 12B is a transformer-based language model developed collaboratively by Mistral AI and NVIDIA, featuring 12 billion parameters and a 128,000-token context window. The model incorporates grouped query attention, quantization-aware training for FP8 inference, and utilizes the custom Tekken tokenizer for improved multilingual and code compression efficiency. Available in both base and instruction-tuned variants, it demonstrates competitive performance on standard benchmarks while supporting function calling and multilingual capabilities across numerous languages including English, Chinese, Arabic, and various European languages.

Llama 3.1 70B is a transformer-based decoder language model developed by Meta with 70 billion parameters, trained on approximately 15 trillion tokens with a 128K context window. The model supports eight languages and demonstrates competitive performance across benchmarks for reasoning, coding, mathematics, and multilingual tasks. It is available under the Llama 3.1 Community License Agreement for research and commercial applications.

Gemma 2 9B is an open-weights decoder-only transformer language model developed by Google as part of the Gemma family. Trained on 8 trillion tokens using TPUv5p infrastructure, the model supports English text generation, question answering, and summarization tasks. Available in both pre-trained and instruction-tuned versions with bfloat16 precision, it demonstrates competitive performance on benchmarks like MMLU and coding evaluations while incorporating safety filtering mechanisms.

DeepSeek Coder V2 Lite is an open-source Mixture-of-Experts code language model featuring 16 billion total parameters with 2.4 billion active parameters during inference. The model supports 338 programming languages, processes up to 128,000 tokens of context, and demonstrates competitive performance on code generation benchmarks including 81.1% accuracy on Python HumanEval tasks.

Qwen2-72B is a 72.71 billion parameter Transformer-based language model developed by Alibaba Cloud, featuring Grouped Query Attention and SwiGLU activation functions. The model demonstrates strong performance across diverse benchmarks including MMLU (84.2), HumanEval (64.6), and GSM8K (89.5), with multilingual capabilities spanning 27 languages and extended context handling up to 128,000 tokens for specialized applications.

Yi 1.5 34B is a 34.4 billion parameter decoder-only Transformer language model developed by 01.AI, featuring Grouped-Query Attention and SwiGLU activations. Trained on 3.1 trillion bilingual tokens, it demonstrates capabilities in reasoning, mathematics, and code generation, with variants supporting up to 200,000 token contexts and multimodal understanding through vision-language extensions.

DeepSeek V2 is a large-scale Mixture-of-Experts language model with 236 billion total parameters, activating only 21 billion per token. It features Multi-head Latent Attention for reduced memory usage and supports context lengths up to 128,000 tokens. Trained on 8.1 trillion tokens with emphasis on English and Chinese data, it demonstrates competitive performance across language understanding, code generation, and mathematical reasoning tasks while achieving significant efficiency improvements over dense models.

Phi-3 Mini Instruct is a 3.8 billion parameter instruction-tuned language model developed by Microsoft using a dense decoder-only Transformer architecture. The model supports a 128,000 token context window and was trained on 4.9 trillion tokens of high-quality data, followed by supervised fine-tuning and direct preference optimization. It demonstrates competitive performance in reasoning, mathematics, and code generation tasks among models under 13 billion parameters, with particular strengths in long-context understanding and structured output generation.

Llama 3 8B is an open-weights transformer-based language model developed by Meta, featuring 8 billion parameters and trained on over 15 trillion tokens. The model utilizes grouped-query attention and a 128,000-token vocabulary, supporting 8,192-token context lengths. Available in both pretrained and instruction-tuned variants, it demonstrates capabilities in text generation, code completion, and conversational tasks across multiple languages.

Llama 4 Scout (17Bx16E) is a multimodal large language model developed by Meta using a Mixture-of-Experts transformer architecture with 109 billion total parameters and 17 billion active parameters per token. The model features a 10 million token context window, supports text and image understanding across multiple languages, and was trained on approximately 40 trillion tokens with an August 2024 knowledge cutoff.

Command R+ v01 is a 104-billion parameter open-weights language model developed by Cohere, optimized for retrieval-augmented generation, tool use, and multilingual tasks. The model features a 128,000-token context window and specializes in generating outputs with inline citations from retrieved documents. It supports automated tool calling, demonstrates competitive performance across standard benchmarks, and includes efficient tokenization for non-English languages, making it suitable for enterprise applications requiring factual accuracy and transparency.

Command R v01 is a 35-billion-parameter transformer-based language model developed by Cohere, featuring retrieval-augmented generation with explicit citations, tool use capabilities, and multilingual support across ten languages. The model supports a 128,000-token context window and targets enterprise applications, multi-step reasoning tasks, and long-context workloads, though it requires commercial licensing for enterprise use.

Playground v2.5 Aesthetic is a diffusion-based text-to-image model that generates images at 1024x1024 resolution across multiple aspect ratios. Developed by Playground and released in February 2024, it employs the EDM training framework and human preference alignment techniques to improve color vibrancy, contrast, and human feature rendering compared to its predecessor and other open-source models like Stable Diffusion XL.

Stable Cascade Stage B is an intermediate latent super-resolution component within Stability AI's three-stage text-to-image generation system built on the Würstchen architecture. It operates as a diffusion model that upscales compressed 16×24×24 latents from Stage C to 4×256×256 representations, preserving semantic content while restoring fine details. Available in 700M and 1.5B parameter versions, Stage B enables the system's efficient 42:1 compression ratio and supports extensions like ControlNet and LoRA for enhanced creative workflows.
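
The latent shapes quoted above can be checked with a little arithmetic. The sketch below assumes a 1024×1024 input image, which is not stated in the description; the latent shapes are the ones given in the text, and the helper names are hypothetical.

```python
IMAGE_SIDE = 1024               # assumed input resolution (not stated above)
STAGE_C_LATENT = (16, 24, 24)   # channels, height, width
STAGE_B_LATENT = (4, 256, 256)

def spatial_factor(larger_side, smaller_side):
    """Ratio between two side lengths."""
    return larger_side / smaller_side

def numel(shape):
    """Total number of values in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count

image_to_stage_c = spatial_factor(IMAGE_SIDE, STAGE_C_LATENT[1])
stage_c_to_b = spatial_factor(STAGE_B_LATENT[1], STAGE_C_LATENT[1])

print(round(image_to_stage_c, 2))                    # per-side compression
print(round(stage_c_to_b, 2))                        # per-side upscaling in Stage B
print(numel(STAGE_C_LATENT), numel(STAGE_B_LATENT))  # values per latent
```

Under that assumption, the per-side compression from image to Stage C latent is about 42.67, which appears to be the figure rounded to 42:1 in the description, and Stage B expands each latent side by roughly 10.67 times.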

Stable Video Diffusion XT 1.1 is a latent diffusion model developed by Stability AI that generates 25-frame video sequences at 1024x576 resolution from single input images. The model employs a three-stage training process including image pretraining, video training on curated datasets, and high-resolution finetuning, enabling motion synthesis with configurable camera controls and temporal consistency for image-to-video transformation applications.

Qwen 1.5 72B is a 72-billion parameter transformer-based language model developed by Alibaba Cloud's Qwen Team and released in February 2024. The model supports a 32,768-token context window and demonstrates strong multilingual capabilities across 12 languages, achieving competitive performance on benchmarks including MMLU (77.5), C-Eval (84.1), and GSM8K (79.5). It features alignment optimization through Direct Preference Optimization and Proximal Policy Optimization techniques, enabling effective instruction-following and integration with external systems for applications including retrieval-augmented generation and code interpretation.

The SDXL Motion Model is an AnimateDiff-based video generation framework that adds temporal animation capabilities to existing text-to-image diffusion models. Built for compatibility with SDXL at 1024×1024 resolution, it employs a plug-and-play motion module trained on video datasets to generate coherent animated sequences while preserving the visual style of the underlying image model.

Phi-2 is a 2.7 billion parameter Transformer-based language model developed by Microsoft Research and released in December 2023. The model was trained on approximately 1.4 trillion tokens using a "textbook-quality" data approach, incorporating synthetic data from GPT-3.5 and filtered web sources. Phi-2 demonstrates competitive performance in reasoning, language understanding, and code generation tasks compared to larger models in its parameter class.

Mixtral 8x7B is a sparse Mixture of Experts language model developed by Mistral AI and released under the Apache 2.0 license in December 2023. The model uses a decoder-only transformer architecture with eight expert networks per layer, activating only two experts per token, resulting in 12.9 billion active parameters from a total 46.7 billion. It demonstrates competitive performance on benchmarks including MMLU, achieving multilingual capabilities across English, French, German, Spanish, and Italian while maintaining efficient inference speeds.

Playground v2 Aesthetic is a latent diffusion text-to-image model developed by playgroundai that generates 1024x1024 pixel images using dual pre-trained text encoders (OpenCLIP-ViT/G and CLIP-ViT/L). The model achieved a 7.07 FID score on the MJHQ-30K benchmark and demonstrated a 2.5x preference rate over Stable Diffusion XL in user studies, focusing on high-aesthetic image synthesis with strong prompt alignment.

Stable Video Diffusion XT is a generative AI model developed by Stability AI that extends the Stable Diffusion architecture for video synthesis. The model supports image-to-video and text-to-video generation, producing up to 25 frames at resolutions supporting 3-30 fps. Built on a latent video diffusion architecture with over 1.5 billion parameters, SVD-XT incorporates temporal modeling layers and was trained using a three-stage methodology on curated video datasets.

Yi 1 34B is a bilingual transformer-based language model developed by 01.AI, trained on 3 trillion tokens with support for both English and Chinese. The model features a 4,096-token context window and demonstrates competitive performance on multilingual benchmarks including MMLU, CMMLU, and C-Eval, with variants available including extended 200K context and chat-optimized versions released under Apache 2.0 license.

MusicGen is a text-to-music generation model developed by Meta's FAIR team as part of the AudioCraft library. The model uses a two-stage architecture combining EnCodec neural audio compression with a transformer-based autoregressive language model to generate musical audio from textual descriptions or melody inputs. Trained on approximately 20,000 hours of licensed music, MusicGen supports both monophonic and stereophonic outputs and demonstrates competitive performance in objective and subjective evaluations against contemporary music generation models.

Vocos is a neural vocoder developed by GemeloAI that employs a Fourier-based architecture to generate Short-Time Fourier Transform spectral coefficients rather than directly modeling time-domain waveforms. The model supports both mel-spectrogram and neural audio codec token inputs, operates under the MIT license, and demonstrates computational efficiency through its use of inverse STFT for audio reconstruction while achieving competitive performance metrics on speech and music synthesis tasks.

CodeLlama 34B is a large language model developed by Meta that builds upon Llama 2's architecture and is optimized for code generation, understanding, and programming tasks. The model supports multiple programming languages including Python, C++, Java, and JavaScript, with an extended context window of up to 100,000 tokens for handling large codebases. Available in three variants (Base, Python-specialized, and Instruct), it achieved 53.7% accuracy on HumanEval and 56.2% on MBPP benchmarks, demonstrating capabilities in code completion, debugging, and natural language explanations.

Llama 2 7B is a transformer-based language model developed by Meta with 7 billion parameters, trained on 2 trillion tokens with a 4,096-token context length. The model supports text generation in English and 27 other languages, with chat-optimized variants fine-tuned using supervised learning and reinforcement learning from human feedback for dialogue applications.

Llama 2 70B is a 70-billion parameter transformer-based language model developed by Meta, featuring Grouped-Query Attention and a 4,096-token context window. Trained on 2 trillion tokens with a September 2022 cutoff, it scores 68.9 on MMLU and 37.5 pass@1 on code generation tasks, and it is available in both pretrained and chat-optimized variants under Meta's commercial license.
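Grouped-Query Attention lets several query heads share one key/value head, which shrinks the key/value cache. The sketch below shows only the head-to-group mapping; the head counts (64 query heads, 8 key/value heads) are an assumption not stated in the description above, and the function names are hypothetical.

```python
def kv_head_for_query_head(q_head, n_q_heads=64, n_kv_heads=8):
    """Return the index of the key/value head shared by query head q_head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into key/value groups")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size

def kv_cache_reduction(n_q_heads=64, n_kv_heads=8):
    """Factor by which the key/value cache shrinks versus one KV head per query head."""
    return n_q_heads / n_kv_heads

# Collect which query heads end up sharing each key/value head.
groups = {}
for q in range(64):
    groups.setdefault(kv_head_for_query_head(q), []).append(q)
```

Under these assumed head counts, each key/value head serves eight query heads, so the cache holds one eighth as many key/value tensors as standard multi-head attention would.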

Bark is a transformer-based text-to-audio model that generates multilingual speech, music, and sound effects by converting text directly to audio tokens using EnCodec quantization. The model supports over 13 languages with 100+ speaker presets and can produce nonverbal sounds like laughter through special tokens, operating via a three-stage pipeline from semantic to fine audio tokens.

LLaMA 13B is a transformer-based language model developed by Meta as part of the LLaMA model family, featuring 13 billion parameters and trained on 1.4 trillion tokens from publicly available datasets. The model incorporates architectural optimizations including RMSNorm, SwiGLU activation functions, and rotary positional embeddings, achieving competitive performance with larger models while maintaining efficiency. Released under a noncommercial research license, it demonstrates capabilities across language understanding, reasoning, and code generation benchmarks.

LLaMA 65B is a 65.2 billion parameter transformer-based language model developed by Meta and released in February 2023. The model utilizes architectural optimizations including RMSNorm pre-normalization, SwiGLU activation functions, and rotary positional embeddings. Trained exclusively on 1.4 trillion tokens from publicly available datasets including CommonCrawl, Wikipedia, GitHub, and arXiv, it demonstrates competitive performance across natural language understanding benchmarks while being distributed under a non-commercial research license.
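As a rough illustration of the RMSNorm pre-normalization mentioned above, the following sketch rescales a vector by its root mean square and applies a learned per-element gain. It is a plain-Python sketch rather than the model's code; the `eps` value and the example vector are assumptions.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Scale x so its root mean square is ~1, then apply a per-element gain."""
    mean_square = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)
    return [g * v * scale for v, g in zip(x, gain)]

hidden = [3.0, 4.0]                      # hypothetical hidden-state vector
normed = rms_norm(hidden, gain=[1.0, 1.0])
```

Unlike LayerNorm, this does not subtract the mean, so it needs one fewer reduction per vector.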

Stable Diffusion 2 is an open-source text-to-image diffusion model developed by Stability AI that generates images at resolutions up to 768×768 pixels using latent diffusion techniques. The model employs an OpenCLIP-ViT/H text encoder and was trained on filtered subsets of the LAION-5B dataset. It includes specialized variants for inpainting, depth-conditioned generation, and 4x upscaling, offering improved capabilities over earlier versions while maintaining open accessibility for research applications.

Stable Diffusion 1.5 is a latent text-to-image diffusion model that generates 512x512 images from text prompts using a U-Net architecture conditioned on CLIP text embeddings within a compressed latent space. Trained on LAION dataset subsets, the model supports text-to-image generation, image-to-image translation, and inpainting tasks, released under the CreativeML OpenRAIL-M license for research and commercial applications.

Stable Diffusion 1.1 is a latent text-to-image diffusion model developed by CompVis, Stability AI, and Runway that generates images from natural language prompts. The model uses a VAE to compress images into latent space, a U-Net for denoising, and a CLIP text encoder for conditioning. Trained on LAION dataset subsets at 512×512 resolution, it supports text-to-image generation, image-to-image translation, and inpainting applications while operating efficiently in compressed latent space.
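To make the benefit of working in compressed latent space concrete, the sketch below compares the number of values in a 512×512 RGB image with the number in its latent representation. The downsampling factor of 8 and the 4 latent channels are assumptions not stated in the description above, and the helper names are hypothetical.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for a given image size."""
    return (latent_channels, height // downsample, width // downsample)

def compression_ratio(height, width, rgb_channels=3, **latent_kwargs):
    """How many times fewer values the latent holds than the RGB image."""
    c, h, w = latent_shape(height, width, **latent_kwargs)
    return (height * width * rgb_channels) / (c * h * w)

shape = latent_shape(512, 512)        # (4, 64, 64)
ratio = compression_ratio(512, 512)   # 48.0
```

Under these assumptions the U-Net denoises a tensor with 48 times fewer values than the pixel image, which is where the efficiency of latent diffusion comes from.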

Kimi K2 is an open-source mixture-of-experts language model developed by Moonshot AI, featuring 1 trillion total parameters with 32 billion activated per token. The model offers a 128,000-token context window and specializes in agentic intelligence, tool use, and autonomous reasoning. Trained on 15.5 trillion tokens and refined with reinforcement learning, it is evaluated on coding, mathematical reasoning, and multi-step task execution benchmarks.

Gemma 3n E4B is a multimodal generative AI model developed by Google DeepMind with 8 billion raw parameters yielding 4 billion effective parameters. Built on the MatFormer architecture for mobile and edge deployment, it processes text, image, audio, and video inputs to generate text outputs. The model features elastic inference capabilities, allowing extraction of smaller sub-models for faster performance, and supports over 140 languages with demonstrated proficiency in reasoning, coding, and multilingual tasks.

DeepSeek R1 (0528) is a large language model developed by DeepSeek-AI featuring 671 billion total parameters with 37 billion activated during inference. Built on the DeepSeek-V3-Base architecture with a Mixture-of-Experts design, it employs Group Relative Policy Optimization and multi-stage training with reinforcement learning to enhance reasoning capabilities. The model supports a 128,000-token context length and demonstrates improved performance on mathematical, coding, and reasoning benchmarks compared to its predecessors.

Qwen3-0.6B is a dense language model with 0.6 billion parameters developed by Alibaba Cloud, featuring a 28-layer transformer architecture with Grouped Query Attention. The model supports dual thinking modes for adaptive reasoning and general dialogue, handles contexts of up to 32,768 tokens, and covers over 100 languages. It utilizes strong-to-weak distillation from larger Qwen3 models and is released under the Apache 2.0 license.

Qwen3-4B is a 4.0 billion parameter transformer language model developed by Alibaba Cloud, featuring dual reasoning modes that allow users to toggle between detailed step-by-step thinking and rapid response generation. Released under the Apache 2.0 license, the model supports 32,768-token contexts, demonstrates strong performance across mathematical reasoning and coding benchmarks, and incorporates advanced training techniques including strong-to-weak distillation from larger teacher models.

Qwen3-14B is a dense transformer language model developed by Alibaba Cloud with 14.8 billion parameters, featuring hybrid "thinking" and "non-thinking" reasoning modes that can be controlled via prompts. The model supports 119 languages, extends to 131k token contexts through YaRN scaling, and includes agent capabilities with tool-use functionality, all released under Apache 2.0 license.

Qwen3-30B-A3B is a Mixture-of-Experts language model developed by Alibaba Cloud featuring 30.5 billion total parameters with 3.3 billion activated per token. The model employs hybrid reasoning modes that allow dynamic switching between step-by-step thinking for complex tasks and rapid responses for simpler queries. It supports 119 languages, extends to a context length of 131,072 tokens, and utilizes strong-to-weak distillation from larger Qwen3 models for efficient deployment while maintaining competitive performance on reasoning, coding, and multilingual benchmarks.

HiDream I1 Full is an open-source image generation model developed by HiDream.ai featuring a 17 billion parameter sparse Diffusion Transformer architecture with Mixture-of-Experts design. The model employs hybrid text encoding combining Long-CLIP, T5-XXL, and Llama 3.1 8B components for precise text-to-image synthesis. It demonstrates strong performance on industry benchmarks and supports diverse visual styles through flow-matching in latent space.

Mistral Small 3.1 (2503) is a 24-billion parameter transformer-based model developed by Mistral AI and released under Apache 2.0 license. This multimodal and multilingual model processes both text and visual inputs with a context window of 128,000 tokens using the Tekken tokenizer. It demonstrates competitive performance on academic benchmarks including MMLU and GPQA while supporting function calling and structured output generation for automation workflows.

Gemma 3 4B is a multimodal instruction-tuned model developed by Google DeepMind that processes text and image inputs to generate text outputs. The model features a decoder-only transformer architecture with approximately 4.3 billion parameters, supports context windows up to 128,000 tokens, and operates across over 140 languages. It incorporates a SigLIP vision encoder for image processing and utilizes grouped-query attention with interleaved local and global attention layers for efficient long-context handling.

Gemma 3 27B is a multimodal generative AI model developed by Google DeepMind that processes both text and image inputs to produce text outputs. Built on a decoder-only transformer architecture with 27 billion parameters, it incorporates a SigLIP vision encoder and supports context lengths up to 128,000 tokens. The model was trained on over 14 trillion tokens and demonstrates competitive performance across language, coding, mathematical reasoning, and vision-language tasks.

QwQ 32B is a 32.5-billion parameter causal language model developed by Alibaba Cloud as part of the Qwen series. The model employs a transformer architecture with 64 layers and Grouped Query Attention, trained using supervised fine-tuning and reinforcement learning focused on mathematical reasoning and coding proficiency. Released under Apache 2.0 license, it demonstrates competitive performance on reasoning benchmarks despite its relatively compact size.

Wan 2.1 I2V 14B 480P is an image-to-video generation model developed by Wan-AI featuring 14 billion parameters and operating at 480P resolution. Built on a diffusion transformer architecture with T5-based text encoding and a 3D causal variational autoencoder, the model transforms static images into temporally coherent video sequences guided by textual prompts, supporting both Chinese and English text rendering within its generative capabilities.

Wan 2.1 T2V 14B is a 14-billion parameter video generation model developed by Wan-AI that creates videos from text descriptions or images. The model employs a spatio-temporal variational autoencoder and diffusion transformer architecture to generate content at 480P and 720P resolutions. It supports multiple languages including Chinese and English, handles various video generation tasks, and demonstrates computational efficiency across different hardware configurations when deployed for research applications.

Qwen2.5 VL 7B is a 7-billion parameter multimodal language model developed by Alibaba Cloud that processes text, images, and video inputs. The model features a Vision Transformer with dynamic resolution support and Multimodal Rotary Position Embedding for spatial-temporal understanding. It demonstrates capabilities in document analysis, OCR, object detection, video comprehension, and structured output generation across multiple languages, released under Apache-2.0 license.

Lumina Image 2.0 is a 2 billion parameter text-to-image generative model developed by Alpha-VLLM that utilizes a flow-based diffusion transformer architecture. The model generates high-fidelity images up to 1024x1024 pixels from textual descriptions, employs a Gemma-2-2B text encoder and FLUX-VAE-16CH variational autoencoder, and is released under the Apache-2.0 license with support for multiple inference solvers and fine-tuning capabilities.

MiniMax Text 01 is an open-source large language model developed by MiniMaxAI featuring 456 billion total parameters with 45.9 billion active per token. The model employs a hybrid attention mechanism combining Lightning Attention with periodic Softmax Attention layers across 80 transformer layers, utilizing a Mixture-of-Experts design with 32 experts and Top-2 routing. It supports context lengths up to 4 million tokens during inference and demonstrates competitive performance across text generation, reasoning, and coding benchmarks.
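The total and active parameter counts quoted for the Mixture-of-Experts models in this list can be compared directly. The short sketch below computes the share of parameters used per token, taking the figures (in billions) from the descriptions in this list; the dictionary and function names are hypothetical.

```python
# (total parameters, active parameters per token), both in billions,
# as quoted in the model descriptions in this list.
MOE_MODELS = {
    "Mixtral 8x7B": (46.7, 12.9),
    "Kimi K2": (1000.0, 32.0),
    "DeepSeek R1 (0528)": (671.0, 37.0),
    "Qwen3-30B-A3B": (30.5, 3.3),
    "MiniMax Text 01": (456.0, 45.9),
}

def active_fraction(total_b, active_b):
    """Share of a model's parameters that are used for any single token."""
    return active_b / total_b

for name, (total_b, active_b) in MOE_MODELS.items():
    share = active_fraction(total_b, active_b)
    print(f"{name}: {share:.1%} of parameters active per token")
```

A smaller share means less compute per token relative to model size, although all parameters generally still need to be held in memory for inference.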

DeepSeek-VL2 is a series of Mixture-of-Experts vision-language models developed by DeepSeek-AI that integrates visual and textual understanding through a decoder-only architecture. The models utilize a SigLIP vision encoder with dynamic tiling for high-resolution image processing, coupled with DeepSeekMoE language components featuring Multi-head Latent Attention. Available in three variants with 1.0B, 2.8B, and 4.5B activated parameters, the models support multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding capabilities.

DeepSeek VL2 Tiny is a vision-language model from DeepSeek-AI that activates 1.0 billion parameters using a Mixture-of-Experts architecture. The model combines a SigLIP vision encoder with a DeepSeekMoE-based language component to handle multimodal tasks including visual question answering, optical character recognition, document analysis, and visual grounding across images and text.

Llama 3.3 70B is a 70-billion parameter transformer-based language model developed by Meta, featuring instruction tuning through supervised fine-tuning and reinforcement learning from human feedback. The model supports a 128,000-token context window, incorporates Grouped-Query Attention for enhanced inference efficiency, and supports eight validated languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.